We're excited to announce that Remote Build Execution (RBE) for Bazel is now available in private beta on Bitrise. Starting today, select Bitrise workspaces can accelerate Bazel builds with scalable, parallelized execution on both Linux and macOS stacks.

This release is a major step forward for teams using Bazel, especially those managing large, complex codebases that require speed, flexibility, and reliability. By placing the Bitrise Build Cache, RBE workers, and CI runners within the same data center fabric, we are able to offer:

- Ultra-low latency with a high-speed internal network

- No egress fees since traffic remains within the data center

- The ability to scale builds horizontally across hundreds of workers

What is Bazel Remote Build Execution?

Remote Build Execution takes the work of building and testing off your local machine and distributes it across a remote cluster. Bazel builds often include thousands or tens of thousands of small actions such as compiling, linking, or running tests.

Without RBE, all of these tasks are limited by the resources of a single machine, whether that is a developer’s laptop or a CI runner. With RBE, work is split across a fleet of remote machines and executed in parallel, which significantly reduces build time.

This leads to faster builds and frees up local resources, making development environments more responsive and efficient.
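To make the effect of parallel execution concrete, here is a minimal sketch that estimates wall-clock time when independent actions are handed to whichever worker frees up first. It is a toy model, not how Bazel or Bitrise schedules work: it ignores dependencies between actions, network transfer, and caching, and the action count and durations are made-up numbers.

```python
import heapq

def makespan(action_seconds, workers):
    """Wall-clock time to run independent actions on `workers` machines."""
    # Each heap entry is the time at which one worker becomes free.
    free_at = [0.0] * workers
    heapq.heapify(free_at)
    for duration in action_seconds:
        # Hand the next action to whichever worker frees up first.
        start = heapq.heappop(free_at)
        heapq.heappush(free_at, start + duration)
    return max(free_at)

# Hypothetical workload: 1,000 actions of 2 seconds each.
actions = [2.0] * 1000
local_seconds = makespan(actions, 1)    # everything on a single machine
remote_seconds = makespan(actions, 20)  # spread across 20 workers
print(local_seconds, remote_seconds)
```

Real build graphs have dependency chains that cap how much work can run at once, so actual gains are usually smaller than this idealized model suggests.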

Benchmarking: Envoy proxy build performance

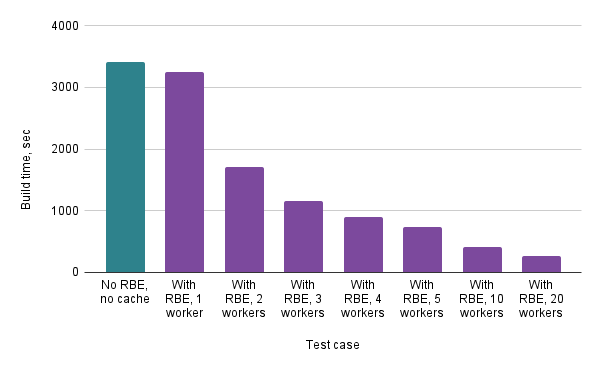

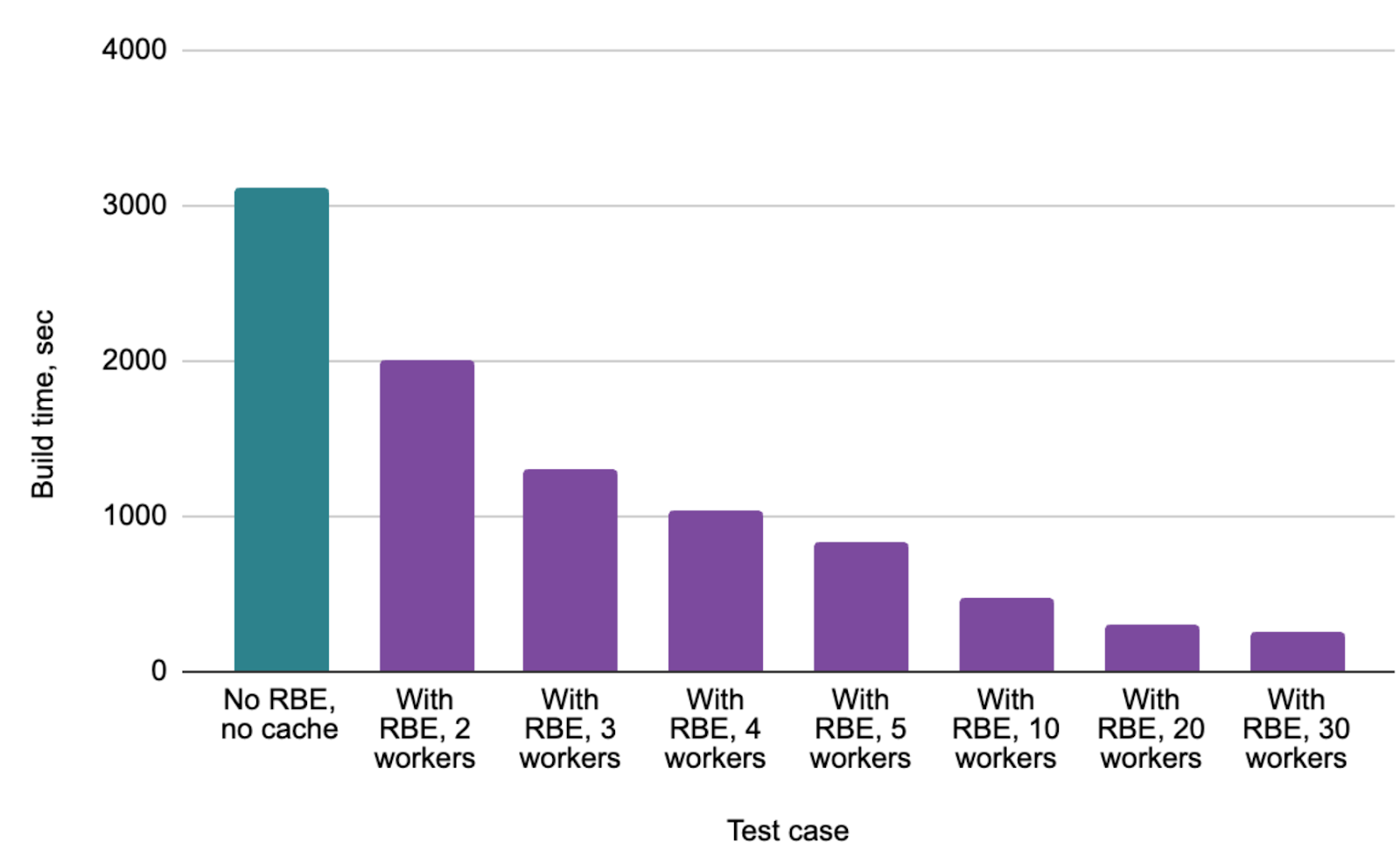

To demonstrate how RBE performs in practice, we benchmarked the open-source Envoy proxy, a large C++ project with a complex Bazel build graph. The builds were run on Linux and macOS, always starting from a cold cache, and using identical machine types for both CI runners and RBE workers to ensure a fair comparison.

We tested eight configurations, ranging from fully local builds to distributed execution using up to 20 RBE workers.

Even with only two workers, build time was cut in half. As the number of workers increased, build time continued to drop. On Linux with 20 workers, the build completed in just 260 seconds, a 13x speedup over the baseline.
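As a quick sanity check, the Linux figures quoted above imply a baseline and a parallel efficiency. The sketch below assumes the 13x figure is exact and measured against the fully local Linux build; the baseline itself is derived here, not a number reported in the benchmark.

```python
# Figures taken from the benchmark text above.
linux_20_workers_s = 260
speedup = 13
workers = 20

# Derived values (assumption: the 13x speedup is exact).
implied_baseline_s = linux_20_workers_s * speedup  # 3380 seconds
implied_baseline_min = implied_baseline_s / 60     # roughly 56 minutes
parallel_efficiency = speedup / workers            # 0.65, i.e. 65%

print(implied_baseline_s, round(implied_baseline_min, 1), parallel_efficiency)
```

An efficiency below 100% is expected: dependency chains in the build graph and per-action overhead keep some workers idle part of the time.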

These results highlight the raw power and scalability of Bitrise RBE. Adding more workers results in faster builds while preserving build correctness and consistency.

Getting started with one-click worker pools

RBE is currently available to select users who apply through our in-app beta registration. If you’re not a Bitrise customer, you can register your interest here.

Once RBE is enabled, you can create worker pools directly from your project settings. Setup is straightforward:

- Choose an OS image such as Linux or macOS and select the machine size (medium, large, etc.)

- Set the minimum and maximum number of workers. The autoscaler will adjust the pool size within those limits.

- Choose a data center region. Cache, workers, and CI runners are always co-located within the selected region.

Once created, a worker pool appears in your dashboard. You can monitor the number of active machines, view logs, and connect to machines via SSH if needed.

Keep in mind that creating a worker pool does not automatically route your builds through RBE. You will also need to:

- Update your .bazelrc configuration

- Enable the RBE flag in the Build cache for Bazel step
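For a rough idea of what the .bazelrc change might involve, the sketch below renders a small `--config=rbe` profile using standard Bazel flags (`--remote_executor`, `--remote_cache`, `--jobs`). The endpoint URLs are placeholders, and the config name `rbe` and the job count are assumptions for illustration. The actual values, any authentication settings, and whatever the Bitrise step configures for you come from the Bitrise documentation.

```python
def render_bazelrc(executor, cache, jobs):
    """Return .bazelrc lines for a hypothetical `--config=rbe` profile."""
    lines = [
        # Where Bazel sends actions for remote execution.
        f"build:rbe --remote_executor={executor}",
        # Where Bazel reads and writes cached action results.
        f"build:rbe --remote_cache={cache}",
        # How many actions Bazel may have in flight at once.
        f"build:rbe --jobs={jobs}",
    ]
    return "\n".join(lines) + "\n"

# Placeholder endpoints: replace with the values from your provider's docs.
fragment = render_bazelrc(
    executor="grpcs://rbe.example.invalid",
    cache="grpcs://cache.example.invalid",
    jobs=100,
)
print(fragment)
```

With a profile like this in place, a build would opt in with `bazel build --config=rbe //...`. Remote execution typically also needs platform and toolchain settings that match the worker image, which this sketch leaves out.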

Full setup instructions are available in our documentation.

How it works

Our RBE system is built on Buildbarn, a reliable open-source implementation of the Remote Execution API used by many organizations. We use it both to schedule actions and to manage worker execution.

For caching, we rely on our own Bitrise Build Cache, which is designed for high performance, multi-tenancy, and seamless scaling. All major components, including the scheduler, cache, workers, and runners, are hosted in the same data center. This setup reduces latency, improves reliability, and ensures full data sovereignty.

We also developed a custom autoscaler from the ground up. It continuously monitors the scheduler’s action queue and:

- Spins up additional workers in under a minute when demand increases

- Scales down to a single worker during idle periods

- Uses the same infrastructure and VM types as our CI runners
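The scaling behavior described above can be sketched as a simple clamped calculation. This is an illustration only, not the Bitrise autoscaler: the `actions_per_worker` ratio is a hypothetical tuning parameter, and a production autoscaler would also account for boot time, cooldown periods, and in-flight work.

```python
import math

def desired_workers(queued_actions, actions_per_worker, min_workers, max_workers):
    """Pick a pool size for the current queue depth, clamped to the pool limits."""
    if queued_actions <= 0:
        # Idle: fall back to the configured floor.
        return min_workers
    # Enough workers to drain the queue at the assumed per-worker capacity.
    needed = math.ceil(queued_actions / actions_per_worker)
    return max(min_workers, min(max_workers, needed))

# Hypothetical pool: between 1 and 20 workers, 8 queued actions per worker.
print(desired_workers(0, 8, 1, 20))     # idle queue
print(desired_workers(40, 8, 1, 20))    # moderate load
print(desired_workers(1000, 8, 1, 20))  # demand beyond the pool maximum
```

Clamping to the minimum and maximum keeps the pool within the limits chosen when the worker pool was created, regardless of how deep the queue gets.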

This level of integration helps avoid network-related errors and keeps performance consistent.

Ready to go faster?

Whether you're already using Bitrise or just looking to supercharge your Bazel builds, there are two ways to get started:

- Already using Bitrise CI? You can apply to the beta directly in the Build Cache interface.

- Not using Bitrise CI? Register your interest here and we’ll work with you to accelerate your Bazel workflows using Remote Build Execution on any CI.